
A.I.FIGHTER

A simple versus-fighting game where the A.I. learns the moves of the human player


A.I. Fighter is a simple versus-fighting game that makes use of artificial intelligence. The computer fighter uses a neural network to sample the fighting style of the human player, evolving to learn good moves from the player and to eliminate loopholes in its own play. The game is designed so that the progress of the computer fighter is visible over time. To make this possible, it includes moves such as combos (sequences of four specific moves), blocks and evades.

The computer fighter starts from a blank state (i.e. it does not move) and samples the fighting style of the human player as the game is played. At the end of each round, all the sampled data is fed into the neural network so that the computer fighter can evolve.

The introduction video for A.I.Fighter (includes some technical details)

The starting screen for each round

Player throws a punch at the enemy

A.I. making a move

The ending screen

To understand some of the technical details covered in later sections, you will need to know the gameplay and the rules.

Overview

A.I. Fighter is a 3D beat-’em-up style fighting game in which the player controls a fighter using the keyboard and fights the computer’s fighter over a short timed round. A fighter can win either by a K.O. (knockout) of his opponent, or by having more health than his opponent when the time runs out.
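The two win conditions can be captured in a small check run every frame. This is a minimal sketch: the function name `round_winner` is hypothetical, and treating a double K.O. or equal health at time-out as a draw is our assumption, since the rules above do not cover ties.

```python
from typing import Optional


def round_winner(player_hp: float, ai_hp: float, time_left: float) -> Optional[str]:
    """Return "player", "ai" or "draw" once the round is decided, else None."""
    # K.O.: a fighter whose health reaches zero loses immediately.
    if player_hp <= 0 and ai_hp <= 0:
        return "draw"  # assumption: a simultaneous K.O. counts as a draw
    if ai_hp <= 0:
        return "player"
    if player_hp <= 0:
        return "ai"
    # Time out: the fighter with more health wins.
    if time_left <= 0:
        if player_hp > ai_hp:
            return "player"
        if ai_hp > player_hp:
            return "ai"
        return "draw"  # assumption: equal health at time-out counts as a draw
    return None  # round still in progress
```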

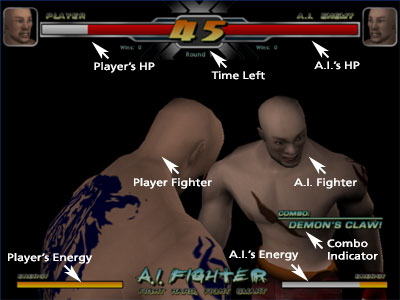

The interface of A.I.Fighter

Energy

Except for Block and Idle, every move expends energy from the fighter’s energy bar. When the energy bar is depleted, the fighter can no longer make any moves except blocking or idling. This is the cost of attacking or evading. While blocking, a fighter’s energy bar does not recover, which discourages ultra-defensive play. In addition, a fighter’s attacks have less and less impact on the opponent’s health as his energy runs low. This discourages players from randomly smashing the keyboard and encourages the prudent, calculated use of moves to deal maximum damage to the opponent while minimizing their own energy loss.
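A minimal sketch of these energy rules follows. The per-move costs come from the controls table; the bar size of 100, the idle recovery rate, the linear damage scaling, and the requirement that a move's full cost be available are all assumptions for illustration, as is the hypothetical `Fighter` class itself.

```python
from dataclasses import dataclass

MAX_ENERGY = 100.0   # assumption: size of the energy bar
IDLE_RECOVERY = 5.0  # assumption: energy recovered per second while idling

# Energy costs from the controls table; Block and Idle are free.
ENERGY_COST = {"light": 8.0, "heavy": 10.0, "evade": 15.0, "block": 0.0, "idle": 0.0}


@dataclass
class Fighter:
    energy: float = MAX_ENERGY

    def can_perform(self, move: str) -> bool:
        # Block and Idle are always allowed; other moves need energy
        # (assumption: the move's full cost must be available).
        return move in ("block", "idle") or self.energy >= ENERGY_COST[move]

    def perform(self, move: str) -> bool:
        """Spend the move's energy cost; return False if the move is not allowed."""
        if not self.can_perform(move):
            return False
        self.energy -= ENERGY_COST[move]
        return True

    def tick(self, move: str, dt: float) -> None:
        # Energy recovers only while idling; blocking does not recover energy.
        if move == "idle":
            self.energy = min(MAX_ENERGY, self.energy + IDLE_RECOVERY * dt)

    def scaled_damage(self, base_damage: float) -> float:
        # Assumption: damage falls off linearly as the attacker's energy drops.
        return base_damage * (self.energy / MAX_ENERGY)
```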

Moves

Fighters in A.I. Fighter can execute the following moves:

- Heavy Attack – Four directions, Left, Right, Up and Forward.

- Light Attack – Four directions, Left, Right, Up and Forward. A Light Attack is faster than a Heavy Attack and expends less energy, but deals less damage.

- Block – Fighter takes less damage when blocking but energy will not be recovered.

- Evade – Fighter takes no damage during the short period of evasion. Evasion consumes a large amount of energy so that players cannot evade and become invulnerable for a long period of time.

- Idle – Fighter is vulnerable to attacks, but recovers lost energy.
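How the defender's move changes the damage it takes could be sketched as below. The 50% reduction for blocking is an assumed value (the text only says blocking reduces damage), `damage_taken` is a hypothetical helper, and attack interrupts are not modeled.

```python
BLOCK_DAMAGE_FACTOR = 0.5  # assumption: the source only says blocking reduces damage


def damage_taken(incoming: float, defender_move: str) -> float:
    """Damage the defender actually takes, given what the defender is doing."""
    if defender_move == "evade":
        return 0.0  # no damage during the short period of evasion
    if defender_move == "block":
        return incoming * BLOCK_DAMAGE_FACTOR  # reduced damage while blocking
    return incoming  # idle (or anything else): full damage
```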

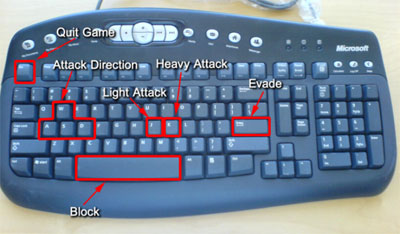

Keyboard controls for the game

| Action | Direction | Keyboard Press | Energy Cost |
|---|---|---|---|
| Light Attack | Left Light Attack (LLA) | A + J | 8 |
| | Right Light Attack (RLA) | D + J | 8 |
| | Up Light Attack (ULA) | W + J | 8 |
| | Forward Light Attack (FLA) | J | 8 |
| Heavy Attack | Left Heavy Attack (LHA) | A + K | 10 |
| | Right Heavy Attack (RHA) | D + K | 10 |
| | Up Heavy Attack (UHA) | W + K | 10 |
| | Forward Heavy Attack (FHA) | K | 10 |
| Block | (Blocks all directions) | Spacebar | 0 |
| Evade | (Evades all attacks) | Enter | 15 |
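The controls table can be turned into a lookup from the set of pressed keys to a move and its energy cost. In this sketch the key names `SPACE` and `ENTER`, the `decode_keys` helper, and treating "no keys pressed" as Idle are assumptions; the bindings and costs are copied from the table.

```python
from typing import Dict, FrozenSet, Iterable, Optional, Tuple

# Bindings and energy costs from the controls table:
# set of simultaneously pressed keys -> (move abbreviation, energy cost).
KEY_BINDINGS: Dict[FrozenSet[str], Tuple[str, int]] = {
    frozenset({"A", "J"}): ("LLA", 8),
    frozenset({"D", "J"}): ("RLA", 8),
    frozenset({"W", "J"}): ("ULA", 8),
    frozenset({"J"}): ("FLA", 8),
    frozenset({"A", "K"}): ("LHA", 10),
    frozenset({"D", "K"}): ("RHA", 10),
    frozenset({"W", "K"}): ("UHA", 10),
    frozenset({"K"}): ("FHA", 10),
    frozenset({"SPACE"}): ("BLOCK", 0),
    frozenset({"ENTER"}): ("EVADE", 15),
    frozenset(): ("IDLE", 0),  # assumption: pressing nothing means Idle
}


def decode_keys(pressed: Iterable[str]) -> Optional[Tuple[str, int]]:
    """Map the currently pressed keys to (move, energy cost), or None if unbound."""
    return KEY_BINDINGS.get(frozenset(pressed))
```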

Combos

In addition to single light and heavy attacks, players can chain light and heavy attacks together into a combo. Executing a combo requires a specific sequence of key presses, but rewards the player with a higher-damage final attack. Combos that are easier to execute deal less damage than those that are harder to execute.

| Combo Name | Move Sequence | Keyboard Press | Damage of Last Attack |
|---|---|---|---|
| One-Incher | FLA, FLA, FLA, FHA | J, J, J, K | 7 |
| Twin-Edge | LLA, RLA, LHA, RHA | A+J, D+J, A+K, D+K | 10 |
| Face-Smasher | ULA, UHA, RHA, LHA | W+J, W+K, D+K, A+K | 12 |
| Demon’s Claw | LLA, FHA, ULA, RHA | A+J, K, W+J, D+K | 15 |

A.I. executes the Face-Smasher combo successfully
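Recognizing a combo amounts to comparing the last four moves against each sequence in the combo table. The sketch below keeps a four-move sliding window; `ComboTracker` is a hypothetical name, it ignores any timing window between key presses that the actual game may enforce, and clearing the window after a completed combo is an assumption.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

# Combos from the table: name -> (move sequence, damage of last attack).
COMBOS: Dict[str, Tuple[Tuple[str, ...], int]] = {
    "One-Incher": (("FLA", "FLA", "FLA", "FHA"), 7),
    "Twin-Edge": (("LLA", "RLA", "LHA", "RHA"), 10),
    "Face-Smasher": (("ULA", "UHA", "RHA", "LHA"), 12),
    "Demon's Claw": (("LLA", "FHA", "ULA", "RHA"), 15),
}


class ComboTracker:
    """Remembers the last four moves and reports a combo when they match one."""

    def __init__(self) -> None:
        self.history: Deque[str] = deque(maxlen=4)  # oldest move drops out automatically

    def push(self, move: str) -> Optional[Tuple[str, int]]:
        self.history.append(move)
        recent = tuple(self.history)
        for name, (sequence, damage) in COMBOS.items():
            if recent == sequence:
                self.history.clear()  # assumption: a finished combo starts a fresh chain
                return name, damage
        return None
```

Any other move, such as a Block, enters the window too and therefore breaks a combo in progress.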

Hyper Mode

When a fighter’s health drops to 20 or below, he enters Hyper Mode. In Hyper Mode, the damage he deals to the opponent is doubled.
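Hyper Mode is a simple multiplier on outgoing damage. A sketch, with `hyper_damage` as a hypothetical helper name:

```python
HYPER_THRESHOLD = 20  # health at or below which a fighter enters Hyper Mode


def hyper_damage(base_damage: float, attacker_hp: float) -> float:
    """Double the damage dealt by a fighter whose health is 20 or below."""
    if attacker_hp <= HYPER_THRESHOLD:
        return base_damage * 2
    return base_damage
```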

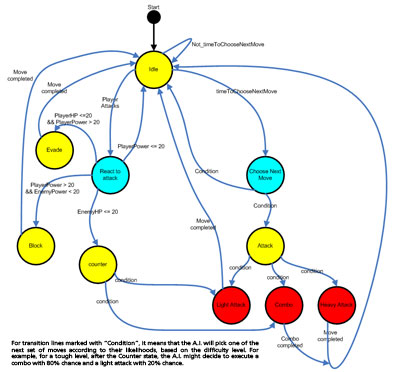

We implemented two versions of the game A.I. The first uses a Finite State Machine (FSM), which controls the actions of the computer-controlled fighter through a fixed set of rules and conditions. The set of finite states in our FSM version of the game is as follows:

The graph showing the set of states and transitions for the FSM version of the game.
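The graph is not reproduced here, so the sketch below uses hypothetical states, events and transitions purely to show how a table-driven FSM picks the A.I. fighter's next state. It also illustrates why such an A.I. is predictable: the same state and event always lead to the same next state.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    ATTACK = auto()
    BLOCK = auto()
    EVADE = auto()


class Event(Enum):
    PLAYER_ATTACKS = auto()
    PLAYER_IDLE = auto()
    LOW_ENERGY = auto()


# Hypothetical transition table: (current state, event) -> next state.
# These are NOT the states of the actual FSM version of the game.
TRANSITIONS = {
    (State.IDLE, Event.PLAYER_ATTACKS): State.BLOCK,
    (State.IDLE, Event.PLAYER_IDLE): State.ATTACK,
    (State.ATTACK, Event.PLAYER_ATTACKS): State.EVADE,
    (State.ATTACK, Event.LOW_ENERGY): State.IDLE,
    (State.BLOCK, Event.PLAYER_IDLE): State.ATTACK,
    (State.EVADE, Event.PLAYER_IDLE): State.ATTACK,
    (State.EVADE, Event.LOW_ENERGY): State.IDLE,
}


def step(state: State, event: Event) -> State:
    """Deterministic transition; unlisted pairs keep the current state."""
    return TRANSITIONS.get((state, event), state)
```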

The main deficiency of the widely used FSM model is the predictability of the gameplay. Because the set of states is finite, the replay value of the game is limited: after a while, the player can spot the patterns in the A.I. fighter’s moves and counter them.

Furthermore, with the FSM model the difficulty of the game comes in fixed steps. Although games usually let players set the difficulty level, this is typically just an integer multiplier applied to the A.I. fighter’s attributes, such as its reaction time and its probability of executing highly damaging moves. In addition, the A.I. fighter has an unfair advantage over the player, since it can respond almost immediately to user input.

To develop a game that has higher replay value and adapts to the player’s level of skill, we decided to implement the second version of the game with machine learning. After researching various machine learning methods, such as decision trees and neural networks, we chose neural networks for our A.I. model. Given the large number of inputs (e.g. the player’s moves, time left, the player’s health) and the large number of possible actions the A.I. fighter can take (e.g. countering, evading, idling), a neural network was deemed very suitable.

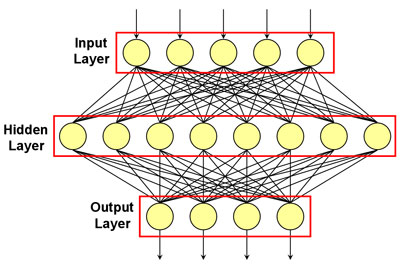

The neural network we set up is a simple feed-forward network, trained by back-propagation using supervised learning.

A three-layered neural network. Data flows from the input layer to the output layer through the hidden layer. The yellow circles represent neurons. The number of neurons used in the actual game has been reduced in this image.

The A.I. reacts to certain variables in the game, namely the 15 normalized inputs we identified, which form the input nodes of the first layer. These inputs include the HP of the player and of the A.I., the energy of the player and of the A.I., the time remaining, and the previous moves of the player and of the A.I., among others.
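A sketch of how the named variables might be scaled into [0, 1] follows. The maximum HP and energy of 100, the 60-second round length, and encoding each previous move as its index among 11 actions divided by 10 are all assumptions, as are the `GameSnapshot` and `normalized_inputs` names; the text names only some of the 15 inputs, so only those seven appear here.

```python
from dataclasses import dataclass
from typing import List

MAX_HP = 100.0      # assumption
MAX_ENERGY = 100.0  # assumption
ROUND_TIME = 60.0   # assumption: round length in seconds
NUM_MOVES = 11      # assumption: previous moves are indices 0..10 into the 11 actions


@dataclass
class GameSnapshot:
    player_hp: float
    ai_hp: float
    player_energy: float
    ai_energy: float
    time_left: float
    player_prev_move: int
    ai_prev_move: int


def normalized_inputs(s: GameSnapshot) -> List[float]:
    """Scale the named game variables into [0, 1] (7 of the 15 inputs)."""
    return [
        s.player_hp / MAX_HP,
        s.ai_hp / MAX_HP,
        s.player_energy / MAX_ENERGY,
        s.ai_energy / MAX_ENERGY,
        s.time_left / ROUND_TIME,
        s.player_prev_move / (NUM_MOVES - 1),
        s.ai_prev_move / (NUM_MOVES - 1),
    ]
```

Encoding a move as a single scalar keeps the input count small; a one-hot encoding of each previous move would need 11 inputs per move on its own.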

We also identified 11 possible outputs, corresponding to the 11 nodes in the output layer, which represent actions such as evade, block, left heavy attack and up light attack. Each output is eventually assigned a floating-point value between 0 and 1. The output node with the highest value determines the A.I.’s action; we consider this to be the node that has fired.
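Picking the fired node is an argmax over the output layer. This sketch assumes the 11 outputs are the 11 moves listed under Moves (four light attacks, four heavy attacks, Block, Evade and Idle), in an order we chose arbitrarily; `fired_action` is a hypothetical name, and ties go to the first node.

```python
from typing import List, Sequence

# Assumption: output node i corresponds to ACTIONS[i].
ACTIONS: List[str] = [
    "LLA", "RLA", "ULA", "FLA",
    "LHA", "RHA", "UHA", "FHA",
    "BLOCK", "EVADE", "IDLE",
]


def fired_action(outputs: Sequence[float]) -> str:
    """Return the action whose output node has the highest value."""
    if len(outputs) != len(ACTIONS):
        raise ValueError(f"expected {len(ACTIONS)} outputs, got {len(outputs)}")
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return ACTIONS[best]
```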

The number of nodes in the 2nd layer, the hidden layer, does not follow any set formula. We determined it by following a few rules of thumb, such as “two-thirds of the total number of input and output nodes.” These rules of thumb were derived from empirical data reported by other programmers. The resulting neural network in A.I. Fighter has the following structure:

| Property | Value |
|---|---|
| Number of layers | 3 |
| 1st layer | 15 input nodes |
| 2nd layer | 20 nodes in hidden layer |
| 3rd layer | 11 output nodes |
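For reference, the two-thirds rule on its own gives about 17 hidden nodes (2/3 × (15 + 11) ≈ 17.3), so the final value of 20 reflects the combination of rules of thumb. Below is a minimal pure-Python sketch of a 15-20-11 feed-forward network trained by back-propagation. Sigmoid activations, a squared-error loss, bias inputs, the initial weight range and the learning rate are our assumptions, since the text does not specify them; `FeedForwardNet` is a hypothetical name and this is not the game's actual implementation.

```python
import math
import random
from typing import List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class FeedForwardNet:
    """Three-layer sigmoid network trained by back-propagation, one sample at a time."""

    def __init__(self, n_in: int = 15, n_hidden: int = 20, n_out: int = 11, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Each weight row has one extra entry that multiplies a constant bias input of 1.0.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x: List[float]) -> Tuple[List[float], List[float]]:
        xb = x + [1.0]
        hidden = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = hidden + [1.0]
        out = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return hidden, out

    def train_step(self, x: List[float], target: List[float], lr: float = 0.5) -> float:
        """Run one gradient-descent update and return the loss before the update."""
        hidden, out = self.forward(x)
        xb, hb = x + [1.0], hidden + [1.0]
        # Output-layer error terms for squared error with sigmoid outputs.
        d_out = [(o - t) * o * (1.0 - o) for o, t in zip(out, target)]
        # Hidden-layer error terms, propagated back through the (not yet updated) w2.
        d_hid = [
            h * (1.0 - h) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
            for j, h in enumerate(hidden)
        ]
        for k, row in enumerate(self.w2):
            for j in range(len(row)):
                row[j] -= lr * d_out[k] * hb[j]
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * d_hid[j] * xb[i]
        return 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))
```

Repeated training on one sample should push the matching output node toward 1 and the others toward 0, so that node becomes the one that fires.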

As each round progresses, we sample inputs and outputs to train the A.I. to emulate some of the player’s good moves. If the A.I. itself makes a good move, we sample that too, so that it learns to repeat the move in future rounds. For example, to make the A.I. emulate the player’s counter-attacks, we sample instances where the A.I. performs a Heavy Attack and the player interrupts it with a Light Attack.
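The sampling step could be organized as a per-round buffer that is flushed into the network at the end of the round. In this sketch `RoundSampler`, `on_exchange` and the move-name convention are hypothetical; it assumes the inputs passed in already describe the situation from the A.I.'s point of view, and `train_step` can be any function taking an input vector and a target vector.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Assumption: output node i corresponds to ACTIONS[i].
ACTIONS = ["LLA", "RLA", "ULA", "FLA", "LHA", "RHA", "UHA", "FHA", "BLOCK", "EVADE", "IDLE"]


def one_hot(move: str) -> List[float]:
    """Target vector with 1.0 on the node for `move` and 0.0 elsewhere."""
    target = [0.0] * len(ACTIONS)
    target[ACTIONS.index(move)] = 1.0
    return target


def is_heavy(move: str) -> bool:
    return move.endswith("HA")


def is_light(move: str) -> bool:
    return move.endswith("LA")


@dataclass
class RoundSampler:
    """Collects (inputs, target) training pairs during a round."""
    samples: List[Tuple[List[float], List[float]]] = field(default_factory=list)

    def record(self, inputs: List[float], good_move: str) -> None:
        # Store the game state together with the move the A.I. should learn to pick.
        self.samples.append((list(inputs), one_hot(good_move)))

    def on_exchange(self, inputs: List[float], ai_move: str, player_move: str) -> None:
        # Rule from the text: the player interrupts an A.I. Heavy Attack with a
        # Light Attack, so the player's counter is sampled for the A.I. to imitate.
        if is_heavy(ai_move) and is_light(player_move):
            self.record(inputs, player_move)

    def end_round(self, train_step: Callable[[List[float], List[float]], float]) -> int:
        # Feed every sample from this round to the network, then clear the buffer.
        for x, y in self.samples:
            train_step(x, y)
        count = len(self.samples)
        self.samples.clear()
        return count
```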

Skeel Lee: Setting up the game (code architecture, overlays etc.), gameplay (combo moves, damage done per move etc.), modeling of fighters, texturing, rigging, animation

Lim Zhen Qin: Integration of neural networks, sampling of human player inputs

Neo Ming Feng: Finite State Machine (FSM) version of the game